Avsr AI is building the Avsr Brain framework, an intelligence layer that enables true robotic autonomy.

What does true robotic autonomy mean?

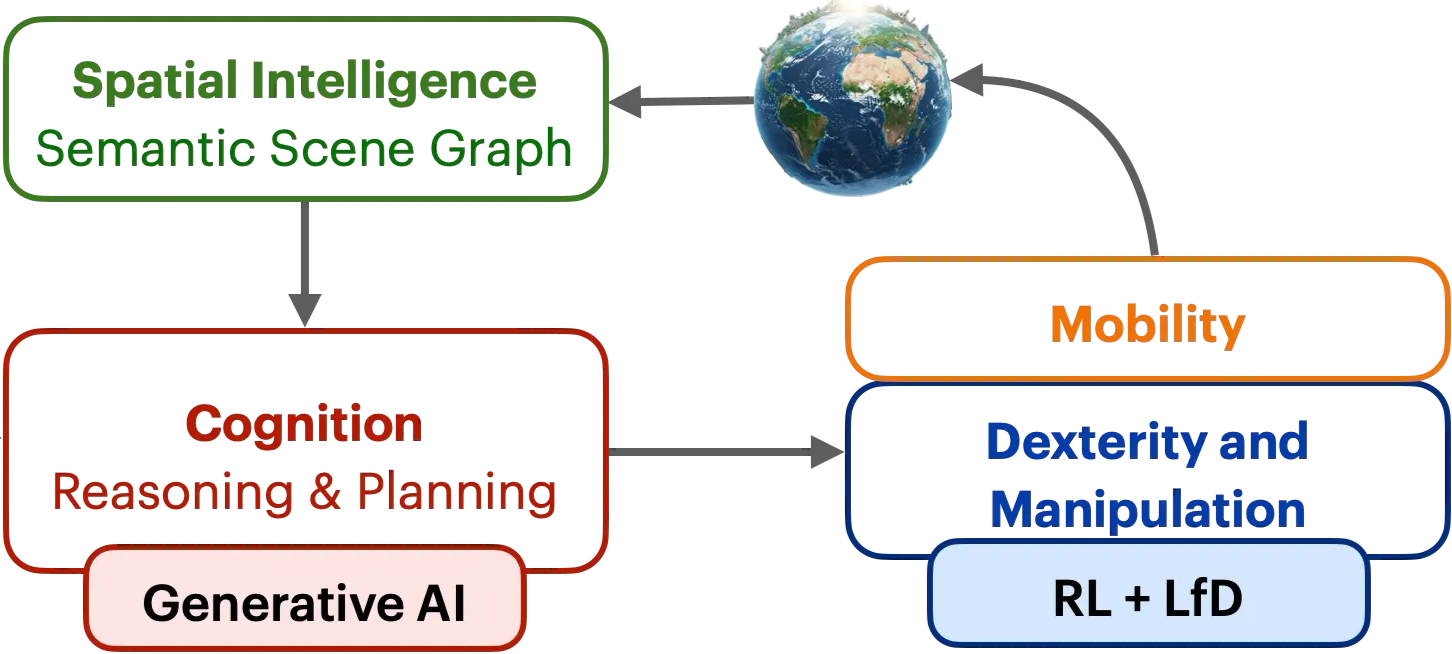

Achieving true robotic autonomy in dynamic environments requires solving three main problems: perception, cognition, and action. Robots must not only observe the world around them but also understand it much as humans do. They must then reason about their observations to plan how to achieve a given task. Finally, they must be able to take a variety of actions to carry out the planned tasks and, ideally, quickly learn to perform new ones.

Avsr Brain

Avsr Brain is a modular, real-time autonomy framework that integrates perception, cognition, and action. It enables robots to:

- Understand their environment using Spatial Intelligence

- Operate autonomously in real time, on device, with low compute

- Collaborate with other robots and with humans in multi-agent settings

- Execute actions with low-latency, on-device control

- Extend their capabilities with apps and skills

This architecture supports a wide range of robot types—from drones to humanoids—and adapts to new tasks and environments with minimal retraining.

Avsr Brain Key Components

1. Novel Perception and Spatial Intelligence

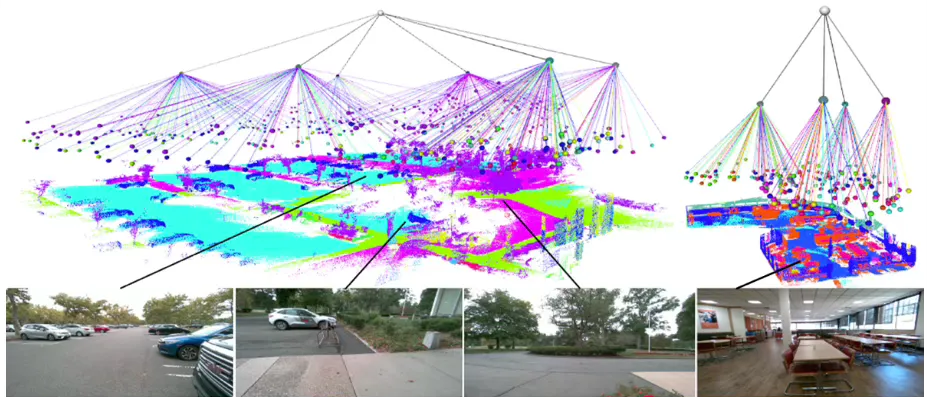

To enable true autonomy, robots need a compact, efficient way to represent their environment, and that representation must be compatible with cognitive models such as LLMs for reasoning and planning. Like humans, robots cannot rely solely on what they currently see. They need memory to act intelligently, for example recalling where they last saw an object so they can complete a task efficiently. The representation must therefore support both real-time perception and long-term memory. However, storing and processing large volumes of raw visual data is memory-intensive and computationally expensive for generative AI models.

An illustration of semantic scene graphs, depicting identified objects and their relationships.

Avsr AI solves this with its patented Spatial Intelligence system, which uses proprietary 3D Semantic Scene Graphs (SSGs) to capture object properties and relationships in a structured, hierarchical 360° graph. SSGs are lightweight, explainable, and optimized for real-time reasoning. They serve as both a perception interface and a memory store, enabling robots to understand and act in complex environments with human-like awareness without sacrificing speed or accuracy.
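To make the idea concrete, here is a toy scene graph in Python. It is a hypothetical sketch, not Avsr AI's proprietary SSG format: the `Node` and `SceneGraph` classes, their fields, and the text serialization are all assumptions. It shows three properties described above: a containment hierarchy (an object inside a room), labeled relations between objects, and a `last_seen` timestamp that lets the graph double as a memory of where an object was last observed.

```python
from dataclasses import dataclass, field
from typing import Optional


# Hypothetical toy scene graph; NOT Avsr AI's proprietary SSG format.
@dataclass
class Node:
    node_id: str
    label: str
    position: tuple[float, float, float]  # (x, y, z) in metres, world frame
    parent: Optional[str] = None          # containment hierarchy, e.g. object -> room
    attributes: dict[str, str] = field(default_factory=dict)
    last_seen: float = 0.0                # timestamp (s) of the latest observation


class SceneGraph:
    """Hierarchical nodes plus labeled (subject, predicate, object) relations."""

    def __init__(self) -> None:
        self.nodes: dict[str, Node] = {}
        self.edges: list[tuple[str, str, str]] = []

    def observe(self, node: Node) -> None:
        # Insert a node, or overwrite it with the newest observation, so the
        # graph also remembers where each object was last seen.
        self.nodes[node.node_id] = node

    def relate(self, subj: str, predicate: str, obj: str) -> None:
        self.edges.append((subj, predicate, obj))

    def children(self, parent_id: str) -> list[str]:
        return [n.node_id for n in self.nodes.values() if n.parent == parent_id]

    def where_last_seen(self, label: str) -> Optional[Node]:
        # Most recently observed node with this label, or None if never seen.
        matches = [n for n in self.nodes.values() if n.label == label]
        return max(matches, key=lambda n: n.last_seen, default=None)

    def to_prompt(self) -> str:
        # Compact, line-based text that could be placed in an LLM prompt.
        lines = []
        for n in self.nodes.values():
            attrs = ", ".join(f"{k}={v}" for k, v in sorted(n.attributes.items()))
            parent = f" in {n.parent}" if n.parent else ""
            lines.append(f"{n.node_id}: {n.label}{parent}" + (f" ({attrs})" if attrs else ""))
        lines += [f"{s} {p} {o}" for s, p, o in self.edges]
        return "\n".join(lines)


g = SceneGraph()
g.observe(Node("kitchen", "room", (0.0, 0.0, 0.0)))
g.observe(Node("table_1", "table", (1.0, 2.0, 0.0), parent="kitchen"))
g.observe(Node("cup_1", "cup", (1.2, 2.1, 0.8), parent="kitchen",
               attributes={"color": "red"}, last_seen=42.0))
g.relate("cup_1", "on", "table_1")
print(g.to_prompt())
```

Rendering the graph as a few short lines of text, rather than passing raw images, illustrates one way a structured scene representation can stay compact enough to include in an LLM prompt.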

2. Modular, Multi-Layered Intelligence Architecture

Avsr AI Brain adopts a systematic, composable, multi-layered architecture that separates high-level reasoning from low-level control while still forming a unified framework in which perception, cognition, and action components work together. A High-Level Planner handles language and task understanding, builds and reasons over 3D SSGs, and performs long-horizon planning by decomposing each task into a sequence of actionable sub-tasks and goals. A Low-Level Planner then executes each goal using optimized motion and manipulation models.

A simplified depiction of Avsr AI Brain: a robot observes its environment, perceives and interprets its observations, and encodes this understanding into a proprietary scene graph. A grounded LLM then processes the scene graph to plan multi-step tasks. Finally, a low-level planner executes the physical actions using a suitable AI model. All components operate synchronously and in real time, enabling intelligent, context-aware behavior.

By building Spatial and Cognitive Intelligence layers on top of conventional action models, such as vision-language-action (VLA) models, our approach reduces the computational and training load on the low-level planner. As a result, training is faster and more stable, and motor-control updates no longer corrupt language or scene representations. The high-level planner also continually refines plans in real time, making execution robust to unexpected outcomes.
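The split between a high-level planner and a low-level executor, including real-time plan refinement, can be sketched as a plan-execute-replan loop. This is a minimal illustration under stated assumptions, not Avsr AI Brain's implementation: `run_task`, `toy_planner`, and `toy_skill` are hypothetical, the planner is a rule-based stand-in for a grounded LLM, and the skill is a stub in place of a VLA or motion model.

```python
from typing import Callable

# Hypothetical interfaces. In a real system the planner would be an LLM grounded
# in the scene graph and each skill a learned motion or manipulation model.
Planner = Callable[[str, dict], list[str]]
Skill = Callable[[str, dict], bool]


def run_task(task: str, state: dict, plan: Planner, execute: Skill,
             max_replans: int = 3) -> list[str]:
    """Run a task sub-goal by sub-goal, asking the planner to replan after a failure.

    Returns the sub-goals that succeeded, in execution order.
    """
    completed: list[str] = []
    goals = plan(task, state)
    replans = 0
    while goals:
        goal = goals.pop(0)
        if execute(goal, state):
            completed.append(goal)
            continue
        if replans == max_replans:
            raise RuntimeError(f"gave up on {task!r} at sub-goal {goal!r}")
        replans += 1
        goals = plan(task, state)  # high-level layer replans from the updated state
    return completed


def toy_planner(task: str, state: dict) -> list[str]:
    # Rule-based stand-in for a grounded LLM: decompose "fetch <object>" using
    # the object's last known location from the robot's memory.
    obj = task.split()[-1]
    loc = state["believed"][obj]
    return [f"go_to:{loc}", f"pick:{obj}", "go_to:user", f"place:{obj}"]


def toy_skill(goal: str, state: dict) -> bool:
    # Stub low-level controller. Picking fails if the object is not where the
    # robot is; the failed attempt simulates a fresh observation that corrects
    # the robot's belief about the object's location.
    action, arg = goal.split(":")
    if action == "go_to":
        state["robot_at"] = arg
        return True
    if action == "pick":
        actual = state["actual"][arg]
        if state["robot_at"] != actual:
            state["believed"][arg] = actual
            return False
        state["holding"] = arg
        return True
    if action == "place":
        return state.get("holding") == arg
    return False


# The robot believes the cup is on the table, but someone moved it to the shelf.
state = {"believed": {"cup": "table"}, "actual": {"cup": "shelf"}, "robot_at": "dock"}
log = run_task("fetch cup", state, toy_planner, toy_skill)
print(log)  # ['go_to:table', 'go_to:shelf', 'pick:cup', 'go_to:user', 'place:cup']
```

In this toy run the first pick fails because the cup has moved; the failed attempt updates the robot's belief, and the planner produces a new plan that goes to the shelf instead. Keeping replanning in the high-level layer means the low-level skill only has to report success or failure.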

Avsr AI bridges perception and action with explicit Spatial Intelligence and layered reasoning, and delivers true context‑aware autonomy in dynamic environments.

A Complete, Scalable Stack for the Future of Robotics

Avsr AI is building a full-stack autonomy platform designed to evolve with the needs of robotics. The Avsr AI Brain effortlessly scales across industry verticals by swapping domain-specific models onto a unified core. Our modular architecture supports a wide range of form factors, from drones and quadrupeds to bimanual humanoids, allowing builders to start simple and scale up as their use cases grow. Whether the task is autonomous navigation in retail today or complex manipulation such as shelf restocking tomorrow, Avsr AI uniquely delivers the flexibility, intelligence, and depth required to power the next generation of robotic systems.
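The idea of swapping domain-specific models onto a unified core can be illustrated with a simple registry that dispatches planner sub-goals to pluggable skills. This is a hypothetical sketch of the general plugin pattern, not Avsr AI's API: `SkillRegistry`, `register`, `dispatch`, and the `"action:argument"` goal format are all assumptions, and the lambdas stand in for real navigation and manipulation models.

```python
from typing import Callable

# A skill maps a goal argument to a status string (stand-in for a real model).
Skill = Callable[[str], str]


class SkillRegistry:
    """Hypothetical core dispatcher: domain-specific skills plug into a fixed core."""

    def __init__(self) -> None:
        self._skills: dict[str, Skill] = {}

    def register(self, action: str, skill: Skill) -> None:
        # Registering an existing action name swaps in the new model.
        self._skills[action] = skill

    def dispatch(self, goal: str) -> str:
        # Goals use a hypothetical "action:argument" format, e.g. "navigate:aisle_3".
        action, _, arg = goal.partition(":")
        if action not in self._skills:
            raise KeyError(f"no skill registered for action {action!r}")
        return self._skills[action](arg)


# Retail deployment today: navigation only.
core = SkillRegistry()
core.register("navigate", lambda arg: f"navigated to {arg}")

# Later: add shelf restocking without changing the core.
core.register("restock", lambda arg: f"restocked {arg}")
print(core.dispatch("navigate:aisle_3"))
print(core.dispatch("restock:shelf_B"))
```

Because the dispatcher only knows action names, a new vertical can add or replace skills without touching the core, which mirrors the start-simple, scale-up path described above.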

Example applications of our technology

Spatial Intelligence at Human-Level Understanding

Our Spatial Intelligence product enables robots to perceive and interpret their surroundings with a degree of understanding comparable to that of humans. For instance, the system can infer that "a cup is to the right of the TV table," allowing for intuitive object-location referencing and navigation in dynamic environments.
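A relation such as "to the right of" can be derived from metric object positions by expressing the offset between two objects in an observer's frame of reference. The helper below is a hypothetical illustration, not the method used by the Spatial Intelligence product: `spatial_relation` is an invented name, it works only on 2D floor-plane coordinates, it assumes the viewer is looking toward the reference object, and it labels the relation by whichever axis (left/right or near/far) dominates.

```python
import math

Point = tuple[float, float]


def spatial_relation(target: Point, reference: Point, viewer: Point) -> str:
    """Hypothetical helper: where `target` lies relative to `reference`,
    as seen by a viewer at `viewer` looking toward `reference`.

    Points are (x, y) floor-plane coordinates, with y 90° counter-clockwise from x.
    """
    fx, fy = reference[0] - viewer[0], reference[1] - viewer[1]
    norm = math.hypot(fx, fy)
    if norm == 0:
        raise ValueError("viewer and reference object coincide")
    fx, fy = fx / norm, fy / norm  # unit viewing direction
    rx, ry = fy, -fx               # viewing direction rotated 90° clockwise = viewer's right
    dx, dy = target[0] - reference[0], target[1] - reference[1]
    lateral = dx * rx + dy * ry    # > 0: to the viewer's right
    depth = dx * fx + dy * fy      # > 0: farther from the viewer
    if abs(lateral) >= abs(depth):  # the dominant axis decides the label
        return "to the right of" if lateral > 0 else "to the left of"
    return "behind" if depth > 0 else "in front of"


robot, tv_table, cup = (0.0, 0.0), (0.0, 2.0), (1.0, 2.0)
print(f"The cup is {spatial_relation(cup, tv_table, robot)} the TV table.")
# The cup is to the right of the TV table.
```

A fuller version would also need vertical relations such as "on" or "under" and a margin for near-ties along the diagonal.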

Applications include:

- Home and Care-home Robotics: Supporting elderly care, medication delivery, and autonomous assistance.

- Autonomous Drones: Enabling situational awareness and real-time navigation for inspection, logistics, and defense scenarios.

Intelligent Manufacturing Automation

In industrial settings, our technology combines advanced robot intelligence with state-of-the-art computer vision to automate complex manipulation tasks such as bin picking, assembly, machine tending, and quality control, particularly in environments where traditional robots fail because of unpredictable conditions or task variability.

We are actively collaborating with several manufacturing partners to integrate our system into next-generation production lines, unlocking efficiency and scalability previously unattainable for small and medium-sized manufacturers.

Toward General-Purpose Humanoid Robots

We are also extending this technology stack to power bimanual humanoid robots, enabling general-purpose functionality. Our team is currently working with multiple humanoid robot manufacturers to integrate Dex-AI and spatial reasoning capabilities into their platforms. This collaboration is accelerating the development of safe, intelligent humanoid assistants for industrial, service, and domestic environments.

Our approach is a first-of-its-kind integration of embodied AI, spatial reasoning, and on-device generative task planning in real-world robotics systems. This enables robots not only to act but also to think, making them more useful, accessible, and cost-effective across a wide range of applications.