Cognix — Spatial and Cognitive Intelligence

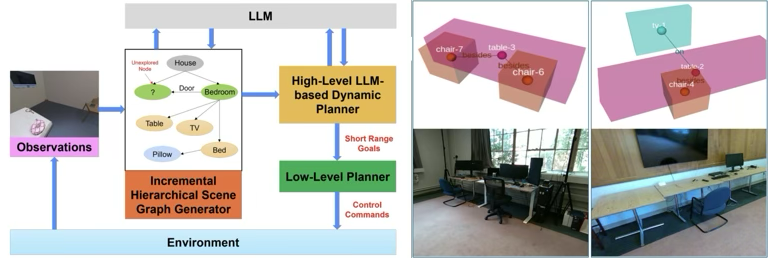

Avsr AI has developed a unique framework that combines Generative AI with Spatial Intelligence based on Semantic Scene Graphs (SSGs) to enable human-like perception, planning, and reasoning in robots. The framework allows robots to perceive, map, and navigate physical spaces using sensors such as cameras, LiDAR, and depth sensors. It also uses Generative AI models for natural language understanding, reasoning, and context-aware decision-making based on the user's commands or behavior. The framework integrates several AI breakthroughs; some of these components are described in more detail below.

- World Observation and Understanding: The framework enables robots to achieve spatial awareness by autonomously exploring their environment and constructing a 360° hierarchical semantic scene graph. This representation captures objects, their positions, and their topological relationships. A proprietary Generative AI-based LLM adds semantic context, enabling context-aware tagging and self-localization. The result is a rich 360° soft-map of the world that integrates spatial and semantic understanding.

Examples: “The parcel is on the desk in the back office on the 10th floor”, “a red vehicle is parked near the entrance of the warehouse”, “a person standing to the right of a blue container”.
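To make the idea of a hierarchical scene graph concrete, the sketch below stores containment edges (object in room, room on floor) alongside spatial relation triples, then walks them to produce a sentence like the first example above. It is a minimal illustration under stated assumptions, not Avsr AI's implementation: the `Node` and `SceneGraph` classes, the level names, and the `describe` method are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """Hypothetical scene-graph node: a floor, room, or object."""
    node_id: str
    label: str
    level: str                                      # e.g. "floor", "room", "object"
    position: tuple = (0.0, 0.0, 0.0)               # metric position in the map frame
    parent: Optional[str] = None                    # containment edge to the enclosing node


@dataclass
class SceneGraph:
    nodes: dict = field(default_factory=dict)
    # Spatial relations stored as (subject_id, relation, object_id) triples.
    relations: list = field(default_factory=list)

    def add(self, node: Node) -> None:
        self.nodes[node.node_id] = node

    def relate(self, subj: str, rel: str, obj: str) -> None:
        self.relations.append((subj, rel, obj))

    def describe(self, node_id: str) -> str:
        """Describe a node by its first spatial relation plus its containment chain."""
        node = self.nodes[node_id]
        text = f"The {node.label}"
        for subj, rel, obj in self.relations:
            if subj == node_id:
                text += f" is {rel} the {self.nodes[obj].label}"
                break
        else:
            text += " is"  # no direct relation; fall back to containment only
        # Walk containment edges upward: room first, then floor.
        preposition = {"room": "in", "floor": "on"}
        parent_id = node.parent
        while parent_id is not None:
            parent = self.nodes[parent_id]
            text += f" {preposition.get(parent.level, 'in')} the {parent.label}"
            parent_id = parent.parent
        return text


g = SceneGraph()
g.add(Node("f10", "10th floor", "floor"))
g.add(Node("office", "back office", "room", parent="f10"))
g.add(Node("desk1", "desk", "object", (2.0, 1.5, 0.0), parent="office"))
g.add(Node("parcel1", "parcel", "object", (2.0, 1.5, 0.8), parent="office"))
g.relate("parcel1", "on", "desk1")
print(g.describe("parcel1"))
# The parcel is on the desk in the back office on the 10th floor
```

Keeping containment separate from other relations means "where is X?" questions reduce to a short walk up the hierarchy, while relations such as "on" or "to the right of" stay available for finer-grained descriptions.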

- This spatial intelligence applies to both indoor and outdoor environments, from security robots operating indoors to drones navigating and identifying objects in open spaces.

- Dynamic Mapping with Real-time Updates: As robots explore a new environment, they build the SSG and 3D map automatically from available sensors, such as LiDAR or RGB-D cameras, eliminating the need for pre-existing maps. The graph is updated in real time as newly identified objects are added and changes to known objects are recorded. Using SSGs, robots can track hundreds of objects and several hundred different types of relationships between them.

- Generative AI-based Reasoning: The SSG is continuously fed into a proprietary LLM that reasons about and plans the next steps. The LLM produces a high-level plan of what the robot needs to do next, which is then broken down into short-range goals for the low-level planner, i.e., the system controlling the robot's body. The result is a fully autonomous system capable of real-time adaptation and planning in dynamic environments.

- Large-Area Understanding and Memory: Robots can build a map covering large geographical areas, such as multi-story buildings or several city blocks, containing all the relevant objects they have seen and the relationships between them. The Semantic Scene Graph (SSG) acts as the robot's long-term memory, allowing it to recall previously seen objects and locations. At any moment, the robot can localize itself within this map and reason about both its current surroundings and past observations. This memory-driven planning gives the robot human-like autonomy and makes it suitable for deployment across expansive and complex environments.
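The real-time update described under Dynamic Mapping can be pictured as "merge or add": each new detection is either matched to an object already in the graph and updated, or inserted as a new node. The sketch below uses simple nearest-neighbour association by label and a distance threshold; this technique, the `DynamicSceneGraph` class, and the `merge_radius` parameter are assumptions for illustration, and a production system would likely use richer cues (appearance, tracking history) to match objects.

```python
import math
from dataclasses import dataclass


@dataclass
class ObjectNode:
    node_id: int
    label: str
    position: tuple   # (x, y, z) in metres, map frame
    last_seen: float  # timestamp in seconds


class DynamicSceneGraph:
    """Hypothetical sketch: merge each detection into an existing node or add a new one."""

    def __init__(self, merge_radius: float = 0.5):
        self.merge_radius = merge_radius  # assumed association threshold in metres
        self.objects = {}
        self._next_id = 0

    def upsert(self, label: str, position: tuple, timestamp: float) -> int:
        # Find the nearest known object with the same label.
        best_id, best_dist = None, math.inf
        for node in self.objects.values():
            if node.label == label:
                d = math.dist(node.position, position)
                if d < best_dist:
                    best_id, best_dist = node.node_id, d
        if best_id is not None and best_dist <= self.merge_radius:
            node = self.objects[best_id]
            node.position = position   # re-observed: refresh position and timestamp
            node.last_seen = timestamp
            return best_id
        # No close match: treat it as a newly identified object.
        new_id = self._next_id
        self._next_id += 1
        self.objects[new_id] = ObjectNode(new_id, label, position, timestamp)
        return new_id


g = DynamicSceneGraph(merge_radius=0.5)
a = g.upsert("chair", (1.0, 2.0, 0.0), timestamp=0.0)
b = g.upsert("chair", (1.2, 2.1, 0.0), timestamp=1.0)  # same chair, seen again
c = g.upsert("chair", (5.0, 2.0, 0.0), timestamp=2.0)  # a second chair
print(a == b, a == c, len(g.objects))  # True False 2
```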
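The two-level planning described under Generative AI-based Reasoning can be sketched as follows: a high-level plan of (action, target) steps is expanded into short-range goals that a low-level controller can execute. Here the LLM is replaced by a hard-coded `PLAN` list, target positions come from a plain dictionary standing in for the scene graph, and movement is split into straight-line waypoints at most `max_step` apart. All of these names and the straight-line simplification are assumptions; a real low-level planner would route around obstacles using the map.

```python
import math

# Stand-in for the LLM's high-level plan (hypothetical). In the described system the
# plan would come from the proprietary LLM reasoning over the scene graph.
PLAN = [("navigate", "kitchen_table"), ("pick", "fork")]


def short_range_goals(start, goal, max_step):
    """Split a straight-line move into evenly spaced waypoints at most max_step apart."""
    n = max(1, math.ceil(math.dist(start, goal) / max_step))
    return [
        (start[0] + (goal[0] - start[0]) * i / n,
         start[1] + (goal[1] - start[1]) * i / n)
        for i in range(1, n + 1)
    ]


def expand_plan(plan, positions, start, max_step=1.0):
    """Turn high-level (action, target) steps into goals for the low-level controller."""
    goals, pose = [], start
    for action, target in plan:
        if action == "navigate":
            goal = positions[target]  # target position looked up in the (stand-in) map
            goals += [("move_to", wp) for wp in short_range_goals(pose, goal, max_step)]
            pose = goal
        else:
            goals.append((action, target))  # non-motion steps are passed through as-is
    return goals


positions = {"kitchen_table": (3.0, 4.0)}
goals = expand_plan(PLAN, positions, start=(0.0, 0.0), max_step=2.0)
for step in goals:
    print(step)
```

Because the high-level plan only names targets, the LLM never has to reason about metric coordinates; the expansion step grounds each target in the map and hands the body controller small, checkable goals.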
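The long-term memory and self-localization described under Large-Area Understanding and Memory can be illustrated with a deliberately simple stand-in. Below, each remembered room is summarized by the set of object labels seen there, the robot localizes by picking the room whose labels best overlap its current view (Jaccard similarity), and recall returns the most recent place an object was observed. The Jaccard matching, the `rooms` and `sightings` structures, and the function names are assumptions chosen for clarity, not the framework's actual method.

```python
from typing import Optional


def jaccard(a: set, b: set) -> float:
    """Overlap between two label sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def localize(observed: set, rooms: dict) -> tuple:
    """Pick the remembered room whose object labels best match the current view."""
    best = max(rooms, key=lambda room: jaccard(observed, rooms[room]))
    return best, jaccard(observed, rooms[best])


def last_seen(label: str, sightings: list) -> Optional[str]:
    """Return the room where `label` was most recently observed, or None if never seen."""
    matches = [s for s in sightings if s[0] == label]
    if not matches:
        return None
    return max(matches, key=lambda s: s[2])[1]


# Hypothetical memory: room -> object labels seen there.
rooms = {
    "kitchen": {"fridge", "sink", "table", "fork"},
    "office": {"desk", "laptop", "chair"},
    "bedroom": {"bed", "lamp", "chair"},
}
# Hypothetical sightings: (label, room, timestamp in seconds).
sightings = [("laptop", "office", 10.0), ("fork", "kitchen", 12.0), ("laptop", "kitchen", 42.0)]

print(localize({"desk", "laptop", "lamp"}, rooms))  # ('office', 0.5)
print(last_seen("laptop", sightings))               # kitchen
```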

Examples and Demos:

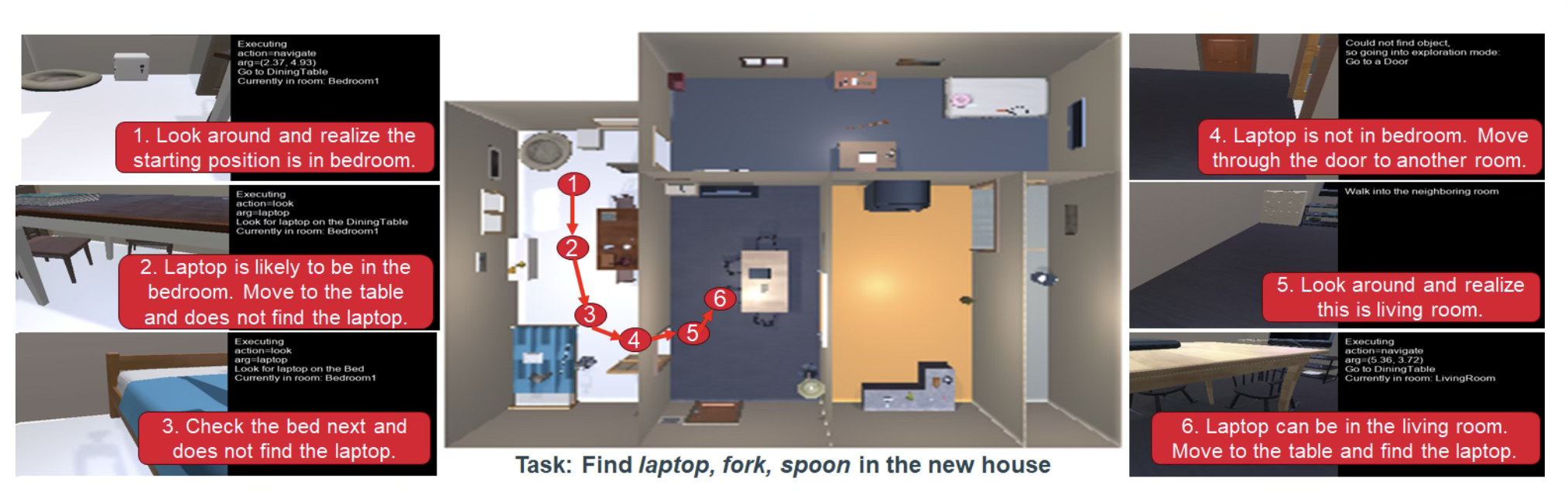

The image below, taken from a simulation environment, shows an example inside a home. The robot is tasked with finding a laptop, a fork, and a spoon, in whichever order yields the quickest result.
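Choosing "whichever order yields the quickest result" is a small ordering problem. If the robot already remembers where each object is (for example, from an earlier exploration recorded in the scene graph), it can simply try every visiting order and keep the cheapest. The sketch below assumes known 2D positions and straight-line distances; the coordinates and the `best_visit_order` function are hypothetical, and brute force is only practical for a handful of targets (n! orders).

```python
import itertools
import math


def best_visit_order(start, targets):
    """Try every visiting order and return the one with the shortest total distance."""
    best_order, best_cost = None, math.inf
    for order in itertools.permutations(targets):
        pos, cost = start, 0.0
        for name in order:
            cost += math.dist(pos, targets[name])  # straight-line travel cost (assumed)
            pos = targets[name]
        if cost < best_cost:
            best_order, best_cost = order, cost
    return list(best_order), best_cost


# Hypothetical remembered positions (metres) for the three objects.
targets = {"laptop": (8.0, 0.0), "fork": (2.0, 0.0), "spoon": (3.0, 0.0)}
print(best_visit_order((0.0, 0.0), targets))  # (['fork', 'spoon', 'laptop'], 8.0)
```

In practice the travel cost would come from path lengths on the map rather than straight lines, and objects not yet in memory would require exploration, but the ordering step stays the same.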

Avsr Cognix Demo with Explanation

Simulated Home Environment

Another example inside a simulated home environment is shown below: